The How2 Challenge

New Tasks for Vision and Language

ICML 2019 Workshop, Long Beach, California

Research at the intersection of vision and language has attracted an increasing amount of attention over the last ten years. Current topics include the study of multi-modal representations, translation between modalities, bootstrapping of labels from one modality into another, visually-grounded question answering, embodied question-answering, segmentation and storytelling, and grounding the meaning of language in visual data. Still, these tasks may not be sufficient to fully exploit the potential of vision and language data.

To support research in this area, we recently released the How2 data-set, containing 2000 hours of how-to instructional videos, with audio, subtitles, Brazilian Portuguese translations, and textual summaries, making it an ideal resource to bring together researchers working on different aspects of multimodal learning. We hope that a common dataset will facilitate comparisons of tools and algorithms, and foster collaboration.

We are organizing a workshop at ICML 2019, to bring together researchers and foster the exchange of ideas in this area. We invite papers that participate in the How2 Challenge and also those that use other public or private multimodal datasets.

For any questions, contact us at how2challenge@gmail.com. Subscribe to the How2 mailing list here.

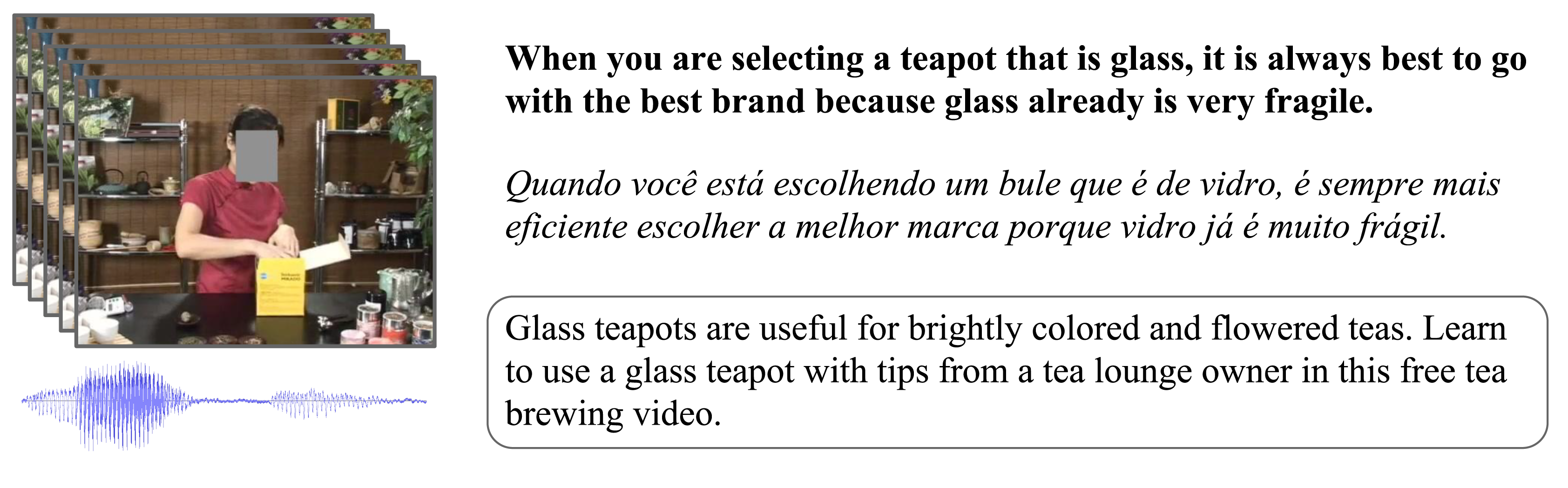

How2 contains a large variety of instructional videos with utterance-level English subtitles (in bold), aligned Portuguese translations (in italics), and video-level English summaries (in the box). Multimodality helps resolve ambiguities and improves understanding.

Get the How2 dataset and start exploring!

Paper | Request Dataset | Learn more

Important Dates

Please note these dates are tentative and may be changed. Submission deadlines are end of day “AoC” (anywhere on earth). We will update this web page as needed.

Challenge starts: March 15, 2019

Deadline for paper submission: May 15, 2019

Author Notification: May 22, 2019

Challenge ends: June 13, 2019

Camera ready paper submission: June 13, 2019

Workshop: June 15, 2019 (see the ICML web page)

Call For Papers

We seek submissions in the following two categories (or tracks):

-

Firstly, papers that describe work on the How2 data, either on the shared challenge tasks, e.g. multi-modal speech recognition (Palaskar et al. ICASSP 2018, Caglayan et al. ICASSP 2019), machine translation (Shared task on Multimodal MT), or video summarization (Libovicky et al. ViGIL, NeurIPS 2018), or creating “un-shared”, novel tasks that create language, speech and/or vision.

Examples of novel tasks could be spoken language translation, cross-modal multimodal learning, unsupervised representation learning (Holzenberger et al. ICASSP 2019), reasoning in vision and language, visual synthesis from language, vision and language interaction for humans, learning from in-the-wild videos (How2 data or others), lip reading, audio-visual scene understanding, sound localization, multimodal fusion, visual question answering, and many more.

-

Secondly, papers that describe other related and relevant work to further vision and language ideas by proposing new tasks, or analyzing the utility of existing tasks and data sets in interesting ways

We encourage both the publication of novel work that is relevant to the topics of discussion, and late-breaking results on the How2 tasks in a single format. We aim to stimulate discussion around new tasks that go beyond image captioning and visual question answering, and which could form the basis for future research in this area.

We will have a limited number of travel grants available for students that are first authors of a paper and are going to present it at the workshop! Please tick the box during submission.

Style and Author Guidelines

We follow the same style guidelines as ICML. The maximum paper length is 8 pages excluding references and acknowledgements, and 12 pages including references and acknowledgements. The use of 10-point Times font is mandatory.

Please see the LaTeX style files. The submissions will be single blind.

Paper Submission Portal

Click here to begin your submission. Choose either the How2 Challenge track (if using How2 dataset) or regular paper track.

Challenge Leaderboard

The How2 Challenge has three tasks: Speech Recognition, Machine Translation, and Summarization.

To submit to the challenge, you could either email your un-scored model outputs to us at how2challenge@gmail.com or submit your evaluated files through the Google Forms below. Visit the How2 evaluate page for more instructions.

Speech Recognition

| Rank | Model | WER (%) | Verified |

|---|---|---|---|

| 1 | Multimodal Ensemble Caglayan et al. 2019 |

15.1 | |

| 2 | Unimodal Ensemble Caglayan et al. 2019 |

15.6 | |

| 3 Baseline |

Multimodal Model Sanabria et al. 2018 |

18.0 | |

| 4 Baseline |

Unimodal Model Sanabria et al. 2018 |

19.4 |

Machine Translation

| Rank | Model | BLEU | Verified |

|---|---|---|---|

| 1 | Attention over Image Features Wu et al. 2019, Imperial College London |

56.2 | |

| 2 | Unimodal SPM Transformer Raunak et al. 2019, CMU |

55.5 | |

| 3 Baseline |

Unimodal Model Sanabria et al. 2018 |

54.4 | |

| 3 Baseline |

Multimodal Model Sanabria et al. 2018 |

54.4 | |

| 5 | BPE Multimodal Lal et al. 2019, JHU |

51.0 |

Summarization

| Rank | Model | Rouge-L | Verified |

|---|---|---|---|

| 1 Baseline |

Multimodal Model (Hierarchical Attention) Libovicky et al. 2018 |

54.9 | |

| 2 Baseline |

Unimodal Model Libovicky et al. 2018 |

53.9 |

Planned Activities (Tentative)

- A series of presentations on the How2 Challenge Tasks, based on invited papers

- Posters from How2 challenge participants to encourage in-depth discussion

- Invited speakers to present other viewpoints and complementary work

- A moderated round table discussion to develop future directions

Schedule

Please find up-to-date information at the workshop’s ICML web site.

Accepted Papers

- Grounded Video Description

Luowei Zhou, Yannis Kalantidis, Xinlei Chen, Jason J. Corso and Marcus Rohrbach [pdf]

Regular Track - Multi-modal Content Localization in Videos Using Weak Supervision

Gourab Kundu, Prahal Arora, Ferdi Adeputra, Larry Anazia, Geoffrey Zweig, Michelle Cheung and Daniel Mckinnon [pdf]

Regular Track - Learning Visually Grounded Representations with Sketches

Roma Patel, Stephen Bach and Ellie Pavlick [pdf]

Regular Track - Predicting Actions to Help Predict Translations

Zixiu Wu, Julia Ive, Josiah Wang, Pranava Madhyastha and Lucia Specia [pdf]

How2 Track - Grounding Object Detections With Transcriptions

Yasufumi Moriya, Ramon Sanabria, Florian Metze and Gareth Jones [pdf]

Regular Track - On Leveraging Visual Modality for ASR Error Correction

Sang Keun Choe, Quanyang Lu, Vikas Raunak, Yi Xu and Florian Metze [pdf]

How2 Track - On Leveraging the Visual Modality for Neural Machine Translation: A Case Study on the How2 Dataset

Vikas Raunak, Sang Keun Choe, Yi Xu, Quanyang Lu and Florian Metze [pdf]

How2 Track - E-Sports Talent Scouting Based on Multimodal Twitch Stream Data

Anna Belova, Wen He and Ziyi Zhong [pdf]

Regular Track - Analyzing Utility of Visual Context in Multimodal Speech Recognition Under Noisy Conditions

Tejas Srinivasan, Ramon Sanabria and Florian Metze [pdf]

Regular Track - Text-contextualized Adaptation For Lecture Automatic Speech Recognition

Saurabh Misra, Ramon Sanabria and Florian Metze

Regular Track

Invited Speakers

Organizers

For any questions, contact us at how2challenge@gmail.com.

Program Committee

- Ozan Çağlayan

- Raffaella Bernardi

- Guillem Collell

- Spandana Gella

- David Hogg

- Markus Müller

- Thales Bertaglia

- Aditya Mogadala

- Siddharth Narayanaswamy

- Nils Holzenberger

- Amanda Duarte

- Herman Kamper

References

1. Palaskar et al. "End-to-End Multimodal Speech Recognition", ICASSP 2018

2. Caglayan et al. "Multimodal Grounding for Sequence-to-Sequence Speech Recognition", ICASSP 2019

3. Elliott et al. "Findings of the Second Shared Task on Multimodal Machine Translation and Multilingual Image Description", WMT 2017

4. Shared Task on Multimodal Machine Translation, Workshop on Machine Translation [Webpage]

5. Libovicky et al. "Multimodal Abstractive Summarization for Open-Domain Videos", ViGIL Workshop, NeurIPS 2018

6. Holzenberger et al. "Learning from Multiview Correlations in Open-Domain Videos", ICASSP 2019

7. Sanabria et al. "How2: A Large-scale Dataset for Multimodal Language Understanding", ViGIL Workshop, NeurIPS 2018